Why Everyone Should Read AI Research Papers

In the world of AI product management, where the hype cycle spins faster than a GPU training the latest LLM, there's one practice I advocate strongly: read the research papers.

Ask software engineers, and many will tell you they can distinguish a good AI PM from a bad one based solely on how the PM talks about the technology. If you're building AI-powered products, are skeptical about AI, or are just tired of the hype, reading the research is essential.

This advice stems from years spent building and launching AI products at Google, including three featured at Google’s 100,000-person All-Hands. Some of these products wouldn't have gotten off the ground if I hadn't dug into the research and, crucially, connected with the researchers behind it. I've also learned directly from AI engineers, both at Google and elsewhere, that a high degree of technical literacy, not just enthusiasm, is what separates effective AI PMs from the rest.

Here's why diving into the research can greatly help you:

1. You’ll gain AI literacy

Think of it this way: you wouldn't build a physical product without understanding the materials and manufacturing processes. The same logic applies to AI. You need a solid grasp of the underlying technology to be effective.

Reading research papers is about building a conceptual framework. It's about understanding the difference between "supervised fine-tuning" and "parameter-efficient fine-tuning", and why that difference matters for your product. Some papers even include the costs of these decisions. It's about knowing that "LLMs" and "LAMs" aren't interchangeable buzzwords. It's about having the vocabulary to communicate effectively with your engineering and research teams, and to set realistic expectations for what your product can achieve.
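
To make the cost side of that difference concrete, here is a minimal sketch assuming LoRA (low-rank adaptation), one popular parameter-efficient method, applied to a single weight matrix. Full fine-tuning updates every entry of a d_out × d_in matrix; LoRA freezes that matrix and trains two small matrices of rank r instead, so it trains r × (d_in + d_out) parameters. The 4096 dimension and rank of 8 are illustrative assumptions, not figures from any particular model or paper.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when every weight in a d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Illustrative sizes only (assumed): a 4096 x 4096 projection and LoRA rank 8.
    d = 4096
    r = 8
    full = full_finetune_params(d, d)
    lora = lora_params(d, d, r)
    print(f"full fine-tuning: {full:,} trainable parameters")  # 16,777,216
    print(f"LoRA (rank {r}):  {lora:,} trainable parameters")  # 65,536
    print(f"LoRA trains {lora / full:.2%} of the weights in this matrix")  # 0.39%
```

The savings in a real model depend on which matrices receive adapters and on the rank chosen, so the costs a paper reports for its own setup are the numbers to trust.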

2. You’ll see the reality of AI's capabilities

The tech press loves a good AI breakthrough story. But those stories rarely tell the whole truth. Research papers, on the other hand, are often refreshingly candid about limitations. They'll discuss areas where models struggle, provide context for performance metrics, and highlight open research questions.

For example, I came across a paper that explored why large vision models, trained on synthetic data, were developing distorted "mental concepts" of everyday objects. The researchers had hypotheses, but the key takeaway for a PM was clear: understand the limitations of synthetic data. Knowing these "known unknowns" is crucial for making informed product decisions and avoiding costly mistakes.

3. You’ll make better decisions about AI

Let me share a story (without naming names). During the peak of the post-ChatGPT frenzy, I worked with a partner engineering team that was incredibly enthusiastic about building "autonomous AI" for customer service. Their vision was to replace human agents with AI, achieving massive cost savings.

Their roadmap projected a fully autonomous system within two years. The fundamental flaw? They hadn't grasped the complexity of the problem. They hadn't considered the need for a Large Action Model (LAM), the challenges of data acquisition and cleaning, or the limitations of current LLMs. They were essentially asking customer service agents to train their own replacements, a deeply problematic proposition.

A year later, that ambitious project had been scaled back dramatically.

I've heard similar sentiments from Google engineers and researchers. One researcher confided that their PM's lack of technical understanding was evident in presentations to leadership. The same PM would ask the engineering team to back up their narrative to leadership, leaving the researchers and engineers in the awkward position of wanting to refute some of the PM's claims about the new product. Enthusiasm for AI does not make up for a lack of technical depth.

Another engineer described the frustration of working with a PM who couldn't grasp why the product's LLM could answer one question about Cloud instances but not another (the answer: a missing backend API call).

Optimism paired with a lack of technical understanding is a recipe for disaster. It leads to overpromising and underdelivering, and ultimately it erodes the trust your team, your stakeholders, and your users place in your leadership and in the products you build.

4. Your stakeholders will thank you

How do you know if your AI launch is going well? How do you know if your models are ready to launch? Your executive stakeholder and sponsor won’t know whether your model’s “95% factuality” score is a good thing or not. Is it an expected result? Is it the best you can get? Or is your fine-tuned model underperforming against expectations? Research papers can provide valuable insights into the latest evaluation techniques and benchmarks, helping you set realistic goals and track progress.
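
As a small illustration of what can sit behind a single benchmark number, here is a minimal sketch of how the F1 score, a common classification metric, combines precision and recall. The function name and the counts in the example are hypothetical, chosen only to show the arithmetic, not results from any real model.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from raw counts.

    precision = tp / (tp + fp): of the items the model flagged, how many were right
    recall    = tp / (tp + fn): of the items it should have flagged, how many it found
    F1        = harmonic mean of precision and recall
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: the model flagged 100 items, 90 of them correctly,
    # and missed 30 items it should have flagged.
    p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
    print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")  # precision=0.90 recall=0.75 F1=0.82
```

Here the model looks strong on precision and noticeably weaker on recall, which is exactly the kind of nuance a single headline number can hide.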

But don't get lost in the world of F1 scores and BLEU scores. Remember, your ultimate goal is to build products that delight users. It's your job to bridge the gap between esoteric research metrics and the user experience, demonstrating the real-world impact of your AI-powered product. At the end of the day, your product is there to serve users and improve their lives, not to turn them into test subjects for a fancy LLM.

That said, you’ll often combine user-centric metrics (CSAT, NPS, average handle time, average resolution time, engagement or session duration, DAU, MAU, and so on) with AI research benchmarks to give a full picture of how your launch is going. For the model-centric metrics, it’s best to ground them in the benchmarks the research community already uses.

5. You’ll supercharge your usage of AI

Research papers aren't just about understanding limitations; they're also a treasure trove of techniques and best practices. They often describe how the researchers achieved their results, providing valuable insights that you can apply to your own projects.

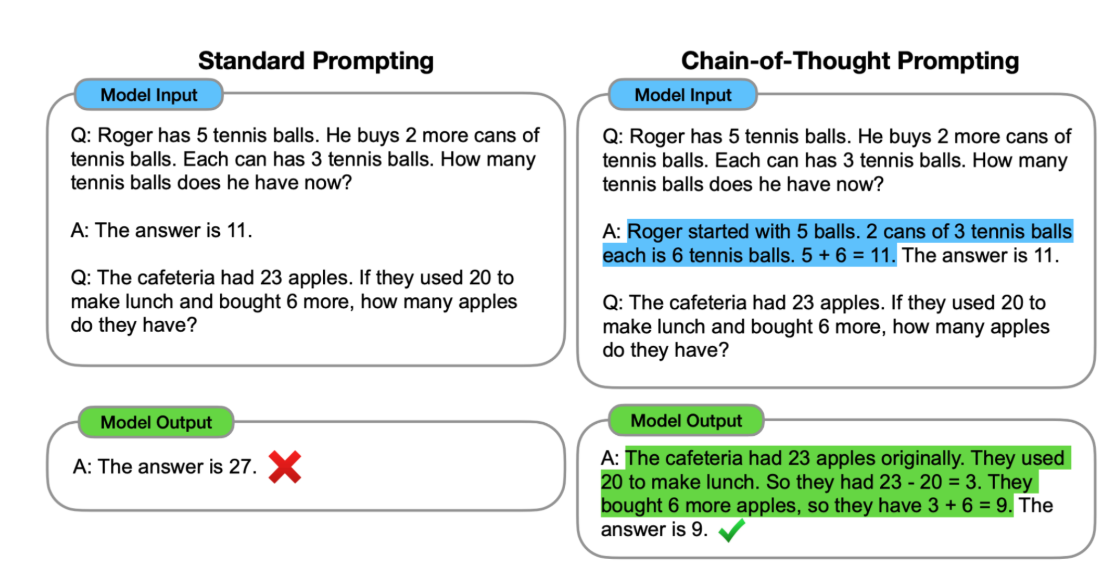

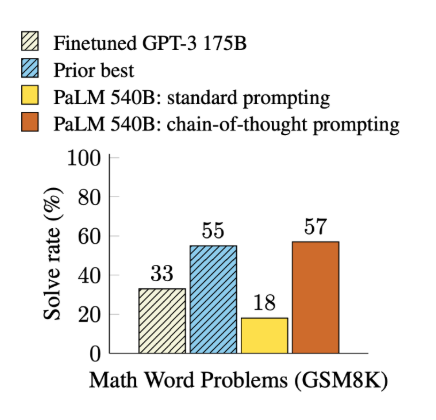

Take prompt engineering, for example. Instead of waiting for a tutorial or blog post, you can go straight to the source, such as the GPT-3 paper, which showed what few-shot prompting can do, or the 2022 Google paper that introduced chain-of-thought prompting, and see exactly how these techniques are implemented. This gives you a significant advantage.
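
As a minimal sketch of the idea, here is a few-shot chain-of-thought prompt assembled as a plain string: the worked example spells out its reasoning before giving the answer, which nudges the model to reason the same way. The exemplar follows the well-known tennis-ball example from the chain-of-thought paper; the helper name `build_cot_prompt` is hypothetical, and no model API is called.

```python
# Hypothetical helper: formats a few-shot chain-of-thought prompt as plain text.
# It does not call any model; the resulting string would be sent to whatever LLM you use.

COT_EXEMPLARS = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
        "5 + 6 = 11. The answer is 11.",
    ),
]


def build_cot_prompt(question: str, exemplars=COT_EXEMPLARS) -> str:
    """Prepend worked examples whose answers spell out intermediate reasoning steps."""
    parts = [f"Q: {q}\nA: {a}" for q, a in exemplars]
    # The new question ends with an open "A:" so the model continues in the same style.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_cot_prompt(
        "The cafeteria had 23 apples. If they used 20 to make lunch "
        "and bought 6 more, how many apples do they have?"
    ))
```

The only difference from a standard few-shot prompt is in the exemplar answers: a standard prompt would show just "The answer is 11." without the intermediate steps.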

The Bottom Line: Be a Student of the Field

In the rapidly evolving world of AI, it’s hugely beneficial to understand both the foundations of the field and its ongoing breakthroughs. That means engaging with the research.

So, don't be intimidated by the jargon or the math. Start with the papers that are relevant to your product area. Look for summaries and blog posts, written by the researchers themselves, that explain the key concepts. Be curious.

The rewards are significant: better products, more informed decisions, and a deeper understanding of the technology that's shaping our future. So grab a cup of coffee, open up arXiv, and start exploring the fascinating world of AI research. Your users (and your engineering team) will thank you.