An AI Practitioner’s Guide: LLM Research Breakthroughs and Implications

I’m sharing this piece because I love diving into research. There’s so much value in learning the methodologies, the insights, and fails from researchers directly working on Large Language Models. I believe all AI practitioners, enthusiasts, and skeptics should read AI research papers even if you aren’t technical, as it’ll help you see the fundamental limits and how to design for those for practical user experiences.

So here we go! Let’s dive into the seminal research that has driven this revolution, leading to models like Bard and ChatGPT. We'll focus on what makes each paper practically significant, linking the research to core LLM concepts like Retrieval Augmented Generation (RAG), Reinforcement Learning from Human Feedback (RLHF), Parameter-Efficient Tuning (PEFT), and Prompt Engineering – topics central to advanced LLM training.

And just in case you don’t want to spend 50 hours reading the seminal research papers, you can view the 2 hour crash course I’ve trained 500+ Googlers with. In case you don’t want to spend 2 hours in a crash course, you can read this 25 minute article below. And in case you don’t want to spend 25 minutes reading, here’s a TLDR:

A Trajectory of Innovation: From Translation Research to Transformers

Early NMT and Attention (Bahdanau et al., 2015): This is where the seeds of modern LLMs were sown. Before this, machine translation was largely statistical. Columbia University’s NLP class had us calculate probabilities of words appearing next by hand using lookup tables. Anyways, the attention mechanism was revolutionary. It allowed the model to dynamically focus on different parts of the input sentence when generating the output, mimicking how a human translator might work. My Take: This is fundamental. Attention is the key concept that separates modern NLP from what came before. It's the basis for everything that follows.

Google's Neural Machine Translation (GNMT, 2016): GNMT showcased end-to-end translation, a significant leap in quality. It used a deep LSTM network (8 layers!). My Take: This paper demonstrated that end-to-end deep learning could outperform traditional, hand-engineered systems. It was a proof-of-concept for the power of scale in neural networks. However, the need for separate models per language pair was a major limitation.

Multilingual NMT (Johnson et al., 2017): The idea of a shared wordpiece vocabulary across multiple languages was a clever hack to address the scalability problem of GNMT. It's a precursor to the idea of a "universal representation” - a suggestion in this paper, but a claim in GPT1. What surprised everyone was that a model trained to translate 12 languages worked better than a model trained just for 2 language pairs - implying efficiencies and emerging capabilities at larger model scales. My Take: This also highlights the importance of clever data representation. The seemingly simple trick of adding a target language token enabled a single model to handle multiple languages, a huge step towards efficiency.

The Transformer (Vaswani et al., 2017): "Attention is All You Need." This is the foundational paper. The Transformer architecture, with its reliance on self-attention and complete abandonment of recurrence, was groundbreaking. It enabled parallelization, leading to faster training and larger models. My Take: This changed everything. The Transformer is the architecture that powers almost all modern LLMs. The ability to parallelize training was a game-changer, opening the door to the massive models we see today. It's also conceptually elegant – attention is powerful.

““Language is remarkably nuanced and adaptable. It can be literal or figurative, flowery or plain, inventive or informational. That versatility makes language

one of humanity’s greatest tools — and one of computer science’s most difficult puzzles.”

The Rise of Pre-training and Fine-tuning

GPT-1 (Radford et al., 2018): This paper solidified the pre-train and fine-tune paradigm. The idea of training a model on a massive unlabeled dataset (in this case, unpublished fiction books) and then adapting it to specific tasks with smaller, labeled datasets was incredibly powerful. My Take: This is where transfer learning in NLP really took off. The use of a decoder-only Transformer is also important, setting the stage for the generative models that would follow.

BERT (Devlin et al., 2018): BERT's bidirectional context encoding was a major innovation. Unlike GPT, which only looked at the preceding context, BERT could consider both the preceding and following context. The Masked Language Modeling (MLM) objective was also clever. My Take: BERT showed the power of bidirectional context. The MLM objective is brilliant – it forces the model to learn rich representations by predicting masked words. This paper also emphasized the importance of pre-training objectives. We used this quite a bit pre-2022 in production launches.

GPT-2 (Radford et al., 2019): GPT-2 demonstrated that a large, unsupervised model could perform a variety of tasks without explicit fine-tuning. This was a hint at the emergent capabilities of LLMs. My Take: The "unsupervised multitask learning" aspect was significant. It suggested that large language models could learn general-purpose representations that could be applied to new tasks with minimal adaptation. The cautious release due to potential misuse also foreshadowed the ethical concerns that would become increasingly important.

Scaling Up: The Era of Massive Models

GPT-3 (Brown et al., 2020): GPT-3, with its 175 billion parameters, was a watershed moment. It showcased the power of scale and demonstrated impressive "few-shot" and "zero-shot" learning abilities. My Take: This paper really popularized the idea of prompt engineering. It showed that, with a sufficiently large model, you could often get good performance just by carefully crafting the input prompt, without any fine-tuning. This was a paradigm shift.

T5 (Raffel et al., 2020): T5's text-to-text framework was a unifying idea. It reframed all NLP tasks as text generation, simplifying the training process. The open-source dataset (C4) was also a valuable contribution. My Take: The text-to-text framework is elegant and simplifies the model interface. T5 also highlighted the importance of large, high-quality datasets.

MuM: An under the radar Model that was made public by Google in 2021, MuM was based on T5, and was trained on 75 languages. My Take: Although more details of this model would be helpful, I think it's really cool that it's a MultiTask model.

Addressing Limitations: Grounding, Safety, and Efficiency

LaMDA (Thoppilan et al., 2022): LaMDA focused on dialogue, a challenging domain for LLMs. It introduced strategies for improving groundedness (connecting responses to external knowledge) and safety. My Take: This paper highlighted the importance of moving beyond simple text generation. Grounding is crucial for factual accuracy, and safety is essential for real-world deployment.

InstructGPT (Ouyang et al., 2022): This paper demonstrated the power of Reinforcement Learning from Human Feedback (RLHF). By training a reward model based on human preferences, OpenAI was able to significantly improve the alignment of the model with human values. My Take: RLHF is a game-changer. It provides a way to fine-tune LLMs to be more helpful, honest, and harmless. This is a crucial step towards responsible AI development.

PaLM (Chowdhery et al., 2022): PaLM showcased the Pathways system, a significant advancement in training efficiency. It also demonstrated impressive reasoning capabilities, particularly with chain-of-thought prompting. My Take: Pathways represents a major step forward in distributed training, making it possible to train even larger models. Chain-of-thought prompting is another powerful technique for improving reasoning.

GLaM: An exciting paper, especially considering it's exploration into sparsity and the Mixture of Experts architecture, which is more efficient. My Take: I really like the exploration in this area. It's also exciting to think about how the model is more similar to how a human brain works.

GPT-4 and Beyond

GPT-4 (OpenAI, 2023): GPT-4 continues the trend of scaling up, with a focus on improved performance and safety. The technical report is less detailed than previous publications, reflecting the increasingly competitive landscape. My Take: GPT-4 highlights the ongoing arms race in LLMs. The emphasis on guardrails and safety is welcome, but the lack of transparency is concerning.

Practical Implications and Future Directions

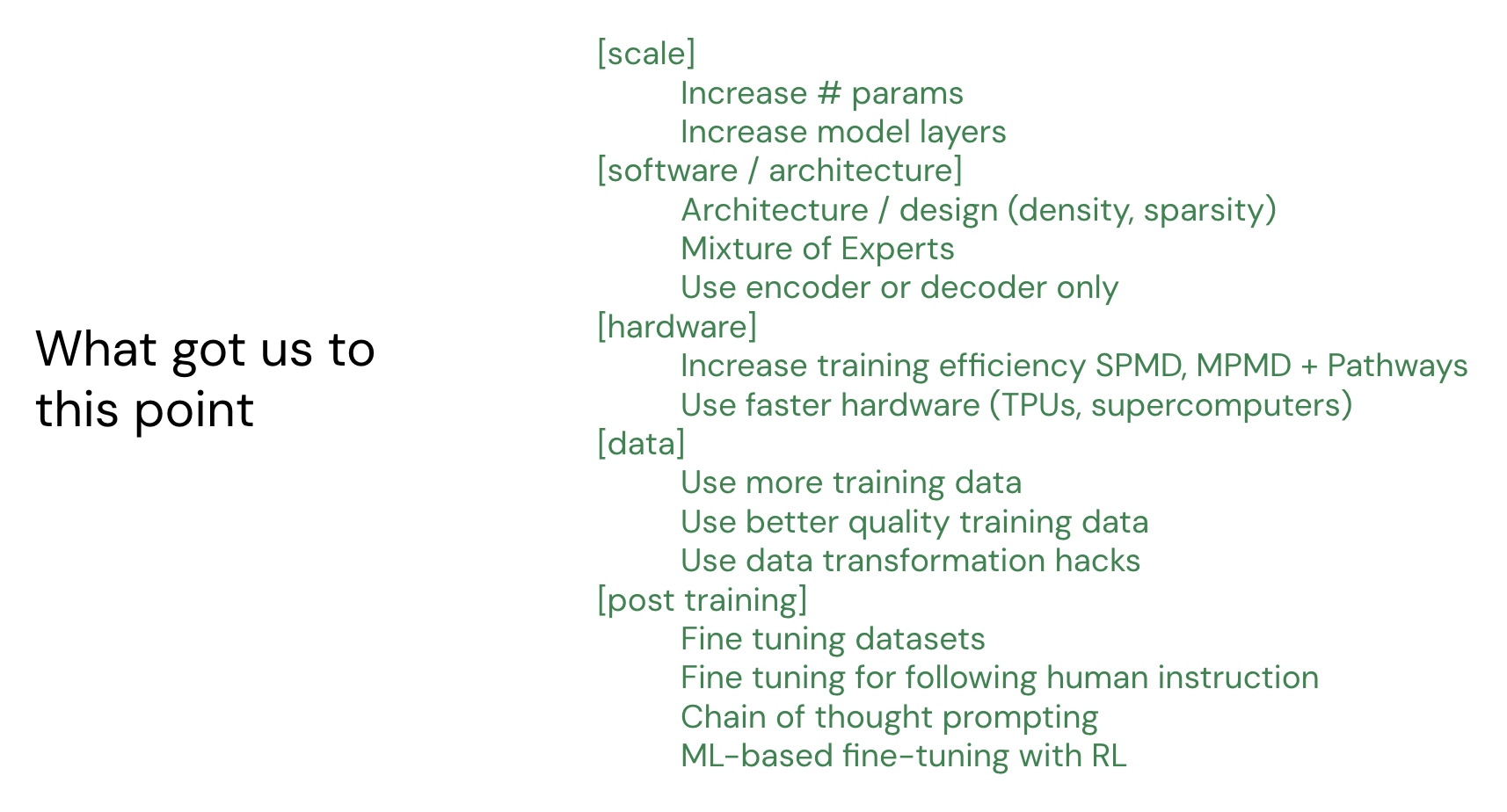

The research journey outlined above has significant implications for LLM practitioners:

Prompt Engineering: Mastering prompt engineering techniques (few-shot learning, chain-of-thought prompting) is essential.

Fine-tuning: Fine-tuning remains crucial for adapting LLMs to specific tasks and domains.

RLHF: RLHF is a powerful tool for aligning models with human values.

Retrieval Augmentation: Connecting LLMs to external knowledge sources (RAG) is key for factual accuracy.

Efficiency: Innovations in architecture (like Mixture of Experts) and training infrastructure (like Pathways) are critical for managing computational costs.

Data Quality: Dataset curation and engineering.

MLBizOps: A new approach to increasing organization-wide AI adoption.

Putting it All Together: A Practitioner's Perspective

So, what does all this mean for you, the LLM practitioner?

Prompt Engineering is King (and Queen!): Learn how to craft effective prompts. Experiment with few-shot learning, chain-of-thought prompting, and other techniques.

Fine-tuning is Still Your Friend: While large models can do amazing things with zero-shot learning, fine-tuning on your specific data is often necessary to achieve optimal performance.

RLHF is Your Ally: Use Reinforcement Learning from Human Feedback to align your models with human values and preferences.

Don't Forget Retrieval: Integrate your LLMs with external knowledge sources (RAG) to improve factual accuracy.

Think About Efficiency: Explore model architectures (like Mixture of Experts) and training infrastructure (like Pathways) to manage computational costs.

Data is Everything: Curate high-quality datasets, and consider data augmentation techniques.

Get to know how you organization works: Experiment with your organizational structure.

The LLM world is moving fast. Stay curious, keep learning, and don't be afraid to experiment! The challenges are real – scaling, data quality, ethical deployment – but the potential is enormous. And remember, it all started with attention...